Google Brain, an artificial intelligence and machine learning project at Google, has been used to power services like Android’s speech recognition system and photo search on Google+.

Now, two of the most longstanding machine learning engineers, one of whom worked on Google Brain, have left the search giant to start a new company. The idea: to build machine learning, artificial… Read More

29

2014

Backed By Tencent And Felicis, Scaled Inference Wants To Be The Google Brain For Everyone

29

2014

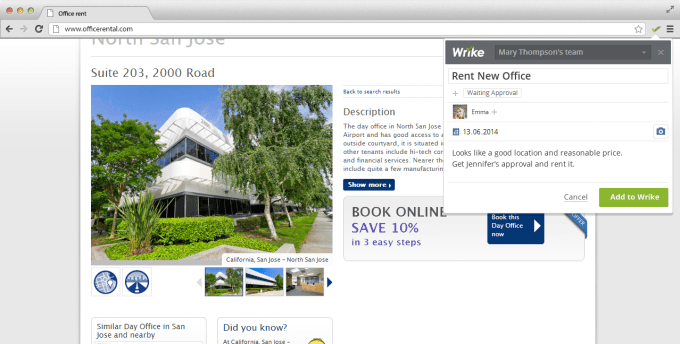

Project Management Platform Wrike Challenges Asana With New Workflow Tools

When it comes to web-based project management tools, we are spoiled for options these days. Popular ones include Asana, which coincidentally released its new iOS app today, Podio and Atlassian’s collaboration services. One lesser-known competitor is Wrike, a project management and collaboration service that raised $10 million from Bain Capital last year. The company is launching a… Read More

29

2014

Ford Plans To Replace BlackBerries With iPhones Beginning This Year

Ford is going to start switching its employees over to iPhone, beginning with moving 3,300 staffers from BlackBerry devices to iPhones by the end of the year. Over the course of the next two years, approximately 6,000 employees will get iPhones, replacing their existing flip phones, but ultimately the goal is to get everyone on Apple’s iPhone platform, a Ford spokesperson told Bloomberg. Read More

29

2014

Asana Doubles Down On Mobile, Releases New iOS App

Asana, a company that provides collaboration tools to corporations and groups, today released a unified iOS application for iPhone and iPad. As other companies have in recent quarters, Asana built its new iOS app using native code. (The app is due to land in the app store at any moment, so if you don’t see it in the App Store, hang tight.) The firm has an Android app similar to its new… Read More

29

2014

MxHero Grabs $500K To Close Seed Round

Email has always been a poor file manager and startup MxHero wants to change the way we manage email attachments. To that end, it announced it closed its seed round today with a $500K contribution. Funders include lead investor White Star Capital along with contributors GW Holdings, Esther Dyson, Ari Kushner, Actinic Ventures and a strategic Miami-based investor group they chose not to name.… Read More

29

2014

BlackBerry Snags German Voice Encryption Firm Secusmart, But Years Too Late

In a move that makes a lot of sense, BlackBerry bought Secusmart today, a German firm that makes voice encryption chips for Blackberries and other mobile phones. Unfortunately, the deal is probably much too late to matter.

The companies have worked closely in the past, so it’s probably not surprising that BlackBerry chose to pull the trigger and purchase them. Secusmart is used by… Read More

29

2014

Prevent MySQL downtime: Set max_user_connections

One of the common causes of downtime with MySQL is running out of connections. Have you ever seen this error? “ERROR 1040 (00000): Too many connections.” If you have worked with MySQL long enough, you surely have. This is quite a nasty error: it can cause complete downtime, transient errors in which successful transactions are mixed with failing ones, or only some processes ceasing to run properly, with various side effects if it is not monitored properly.

There are a number of causes for running out of connections. The most common ones involve the Web/App server creating an unexpectedly large number of connections due to a misconfiguration, or some script/application leaking connections or creating too many connections in error.

The solution I see some people employ is just to increase max_connections to some very high number so MySQL “never” runs out of connections. This, however, can cause resource utilization problems: if a large number of connections become truly active, MySQL may use a lot of memory and start to swap or be killed by the OOM killer, or performance may become very poor due to high contention.

There is a better solution: use different user accounts for different scripts and applications and implement resource limiting for them. Specifically, set max_user_connections:

    mysql> GRANT USAGE ON *.* TO 'batchjob1'@'localhost'
        -> WITH MAX_USER_CONNECTIONS 10;

This approach (available since MySQL 5.0) has multiple benefits:

Security – different user accounts with only the required permissions make your system safer from development errors and more secure from intruders.

Preventing Running out of Connections – if there is a bug or misconfiguration, the application/script will of course run out of connections, but it will be the only part of the system affected and all other applications will be able to use the database normally.

Overload Protection – limiting the number of connections also limits how many queries you can run concurrently. Too much concurrency is often the cause of downtime, and limiting it can reduce the impact of unexpected heavy queries run concurrently by the application.

In addition to configuring max_user_connections for given accounts, you can set it globally in my.cnf as “max_user_connections=20”. This is too coarse though, in my opinion: you’re most likely going to need a different number for different applications/scripts. Where the global max_user_connections setting is most helpful is in multi-tenant environments with many equivalent users sharing the system.
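As a rough illustration of the per-account approach, the following Python sketch generates one GRANT statement per application account, in the same form as the statement shown earlier in this post. The helper name `grant_statements`, the account names other than `batchjob1`, and all limits are hypothetical. The sketch rejects limits below 1 as a design choice of its own. It assumes a MySQL version that accepts resource limits in GRANT; newer releases may need ALTER USER instead.

```python
import re
from typing import Dict, List

# Conservative whitelist so the generated SQL never needs escaping.
_SAFE_NAME = re.compile(r"^[A-Za-z0-9_.%-]+$")


def grant_statements(limits: Dict[str, int], host: str = "localhost") -> List[str]:
    """Build one GRANT ... MAX_USER_CONNECTIONS statement per account."""
    if not _SAFE_NAME.match(host):
        raise ValueError(f"unsafe host: {host!r}")
    statements = []
    for user, max_conn in sorted(limits.items()):
        if not _SAFE_NAME.match(user):
            raise ValueError(f"unsafe user name: {user!r}")
        if max_conn < 1:
            raise ValueError(f"limit for {user!r} must be at least 1")
        statements.append(
            f"GRANT USAGE ON *.* TO '{user}'@'{host}' "
            f"WITH MAX_USER_CONNECTIONS {max_conn};"
        )
    return statements


# Hypothetical accounts and limits, for illustration only.
for stmt in grant_statements({"batchjob1": 10, "webapp": 100, "reporting": 5}):
    print(stmt)
```

Keeping the limits in one mapping makes it easy to review them together and to check that their sum stays below the server-wide max_connections.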

The post Prevent MySQL downtime: Set max_user_connections appeared first on MySQL Performance Blog.

29

2014

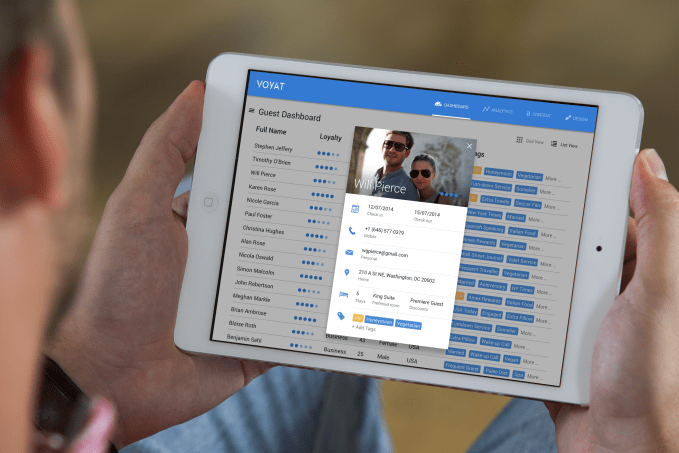

Voyat Launches With $1.8M In Seed Funding For Social CRM Tool Aimed At Hotels

Voyat, a social CRM tool aimed at the hotel industry, came out of stealth today and announced $1.8M in seed funding from Metamorphic Ventures, Eniac Ventures, BoxGroup and several angel investors, including Brett Crosby, who was co-founder of Google Analytics. Metamorphic Ventures is led by David Hirsch, who worked out of Google’s New York office for 8 years. These Google connections… Read More

28

2014

Sunstone Capital Backs Beacon Platform Kontakt.io To The Tune Of $2 Million

“Bluetooth Low Energy and iBeacon are the building blocks of the next wave of computing,” says Max Niederhofer of the micro-location technology that lets your smartphone trigger events based on how close you are to a Beacon transmitter. “It’s a cliché, but the possibilities are endless.” Read More

28

2014

What I learned while migrating a customer MySQL installation to Amazon RDS

Hi, I recently had the experience of assisting with a migration of a customer MySQL installation to Amazon RDS (Relational Database Service). Amazon RDS is a great platform for hosting your MySQL installation and offers the following list of pros and cons:

- You can scale your CPU, IOPS, and storage space separately by using Amazon RDS. Otherwise you need to take downtime and upgrade physical components of a rack-mounted server.

- Backups, software version patching, failure detection, and (some) recovery are automated with Amazon RDS.

- You lose shell access to your DB instance.

- You lose SUPER privilege for regular users. Many SUPER-type statements and commands are provided as stored procedures.

- It is easy to set up multiple read replicas (slaves in READ_ONLY=1 mode).

- You can set up a secondary synchronous instance for failover in the event your primary instance fails.

While this article is written to be Amazon RDS-specific it also has implications for any sort of migration.

- The only way to interface with RDS is through the mysql client, which means loading data must be done using SQL. This means you need to use mysqldump or mydumper, which can be a large endeavour should your dataset be north of 500GB, since this is a lot of single-threaded activity! Think about not only how long dumping and loading will take, but also how long replication will need to catch up on the hours/days/weeks that your dumping and loading procedure took. You might need to allocate more disk space and Provisioned IOPS to your RDS node in order to improve disk throughput, along with changes to innodb_flush_log_at_trx_commit and sync_binlog.

- RDS is set to UTC (system_time_zone=UTC) and this cannot be changed: in Parameter Groups you will see that default_time_zone is set as Modifiable=false. This can bite you if you are planning to use RDS as a slave for a short while before failing the application over to Amazon RDS. If you have configured binlog_format=STATEMENT on your master and you have TIMESTAMP columns, this will lead to differences in the RDS data set for absolute values such as '2014-07-24 10:15:00' vs NOW(). It is also a concern for developers who may not be explicitly setting an appropriate time zone on their MySQL connections. Often the best piece of advice is to leave all database data in UTC no matter where the server is physically located, and deal with localization at the presentation layer.

- Amazon RDS by default has max_allowed_packet=1MB. This is pretty low, as most other configs use 16MB. If you’re using extended-insert (by default, you are), each insert statement can be close to 16MB, which leads to “packet too big” errors on the Amazon RDS side and fails the import.

- Amazon RDS does not support the SUPER privilege for regular users. This becomes quite a challenge, as many tools (Percona Toolkit, for example) are written to assume you have SUPER-level access on all nodes. Simple tasks become vastly more complicated as you need to think of clever workarounds (I’m looking at you, pt-table-sync!).

- Triggers and views thus cannot be applied using the default mysqldump syntax, which includes SQL DEFINER entries. These lines are there so that a user with SUPER can “grant” another user the ability to execute the trigger/view. Your load will fail if you forget this.

- Consider running your load with --force to the mysql client, and log stderr/stdout to disk so you can review errors later. It is painful to spend 4 days loading a 500GB database only to have it fail partway through because you forgot about the SQL DEFINER issue.

- Consider splitting the mysqldump into two phases: --no-data so you dump the schema only, and then --no-create-info so you get just the rows. This way you can isolate faults and solve them along the way.

- Skipping replication events is SLOW. You can’t set sql_slave_skip_counter (since this requires SUPER); instead you need to use the Amazon RDS stored procedure mysql.rds_skip_repl_error. Sadly this stored procedure takes no argument and thus only skips one event at a time. Each execution takes about 2-3 seconds, so if you have a lot of events to skip, that’s a problem. Having to skip ANYTHING is an indication that something went wrong in the process, so if you find yourself in the unenviable position of skipping events, know that pt-table-checksum should be able to give you an idea of how widespread the data divergence is.

- pt-table-sync doesn’t work against Amazon RDS, as it is written to expect SUPER: it wants to set binlog_format=STATEMENT in its session, but that’s not allowed. Kenny Gryp hacked me a version to just skip this check, and Kenny also reported it for inclusion in a future release of Percona Toolkit, but in the meantime you need to work around the lack of SUPER privilege.

- pt-table-sync is SLOW against RDS. As pt-table-sync doesn’t log a lot of detail about where time is spent, I haven’t completely isolated the source of the latency, but I suspect this is more about network round trip than anything else.

- innodb_log_file_size is hardcoded to 128MB in Amazon RDS and you can’t change this. innodb_log_files_in_group does not even show up in the Parameter Groups view, but SHOW GLOBAL VARIABLES reports it as 2. So you’re cookin’ on 256MB; if your writes are heavy this may become a bottleneck with little workaround available in MySQL.

- CHANGE MASTER isn’t available in RDS. You define RDS as a slave by calling a stored procedure where you pass the appropriate options such as CALL mysql.rds_set_external_master.
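One way to deal with the SQL DEFINER issue from the list above is to filter the dump before loading it. The Python sketch below is a minimal example, assuming the DEFINER clauses appear in the backtick-quoted form that mysqldump normally writes (DEFINER=`user`@`host`). The function name `strip_definers` is hypothetical. Note that the regular expression would also alter row data that happens to contain the same text, so it is safest to run it on a schema-only dump.

```python
import re
from typing import Iterable, Iterator

# Matches DEFINER=`user`@`host` as written by mysqldump (backtick-quoted).
# "SQL SECURITY DEFINER" is left alone because it has no "=" after DEFINER.
DEFINER_RE = re.compile(r"DEFINER\s*=\s*`[^`]+`@`[^`]+`")


def strip_definers(lines: Iterable[str]) -> Iterator[str]:
    """Yield each dump line with any DEFINER=`user`@`host` clause removed."""
    for line in lines:
        yield DEFINER_RE.sub("", line)


# Small illustrative input; a real run would stream a dump file instead.
sample = [
    "/*!50017 DEFINER=`root`@`localhost`*/\n",
    "/*!50013 DEFINER=`root`@`localhost` SQL SECURITY DEFINER */\n",
]
for cleaned in strip_definers(sample):
    print(cleaned, end="")
```

To process a real dump, the same generator can be fed from `sys.stdin` and written to `sys.stdout`, so it fits between mysqldump and the mysql client in a pipeline. With the clause removed, the objects are created with the importing user as definer.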

For those of you wondering about the SUPER privilege, I was fortunate that Bill Karwin from Percona’s Support team took the time to review my post and suggested I dig into this deeper. It turns out that Amazon didn’t hack MySQL to remove the SUPER privilege, but instead runs the stored procedures with a security_type of DEFINER:

    mysql> select db,name,type,language,security_type,definer from proc where name = 'rds_external_master' \G
    *************************** 1. row ***************************
               db: mysql
             name: rds_external_master
             type: PROCEDURE
         language: SQL
    security_type: DEFINER
          definer: rdsadmin@localhost
    1 row in set (0.08 sec)

    mysql> show grants for 'rdsadmin'@'localhost';
    +------------------------------------------------------------------------------------------------------+
    | Grants for rdsadmin@localhost                                                                        |
    +------------------------------------------------------------------------------------------------------+
    | GRANT ALL PRIVILEGES ON *.* TO 'rdsadmin'@'localhost' IDENTIFIED BY PASSWORD 'XXX' WITH GRANT OPTION |
    +------------------------------------------------------------------------------------------------------+
    1 row in set (0.07 sec)

So for those of you working with Amazon RDS, I hope that this list saves you some time and helps out your migration! If you get stuck you can always contact Percona Consulting for assistance.

The post What I learned while migrating a customer MySQL installation to Amazon RDS appeared first on MySQL Performance Blog.