In this blog post, I’ll look for the bottleneck that kept the benchmarks in my previous post from achieving better results.

The powerful machine I used in the tests in my previous post has a comparatively slow disk, and therefore I expected my tests would hit a point when I couldn’t increase performance further due to the disk speed.

Hardware configuration:

Processors: physical = 4, cores = 72, virtual = 144, hyperthreading = yes

Memory: 3.0T

Disk speed: about 3K IOPS

OS: CentOS 7.1.1503

File system: XFS

Versions tested and configuration: same as in the first post of this series (check the post for specifics).

Even though I expected my tests would stop increasing in performance due to the disk speed, I did not observe high IO rates in the `iostat` output. I already tested with a full data set that fits in memory. In this case, write performance only affected data flushes and log writes. But we should still see a visible decrease in speed. So I decided to try RW tests totally in memory. I created a ramdisk and put the MySQL datadir on it. Surprisingly, results on the SSD and ramdisk did not differ.
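One way to cross-check what `iostat` reports is to sample the kernel's per-device counters directly and compare the observed rate with the disk's rated ceiling. The following is only a minimal sketch: it assumes a Linux host exposing `/proc/diskstats`, the helper names are hypothetical, the device name is a placeholder, and the 3000 IOPS default is simply the approximate figure quoted in the hardware configuration above.

```python
import time


def parse_diskstats(text):
    """Map device name -> (reads completed, writes completed).

    Expects text in the /proc/diskstats layout, where field 3 (0-based)
    is reads completed and field 7 is writes completed.
    """
    counters = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip blank or truncated lines
        counters[fields[2]] = (int(fields[3]), int(fields[7]))
    return counters


def iops(before, after, interval_s, device):
    """Completed read + write operations per second between two samples."""
    reads_0, writes_0 = before[device]
    reads_1, writes_1 = after[device]
    return ((reads_1 - reads_0) + (writes_1 - writes_0)) / interval_s


def disk_saturation(measured_iops, ceiling_iops=3000):
    """Fraction of the assumed IOPS ceiling that is actually in use."""
    return measured_iops / ceiling_iops


def sample_iops(device, interval_s=1.0, path="/proc/diskstats"):
    """Take two samples interval_s apart and return the IOPS for device."""
    with open(path) as f:
        before = parse_diskstats(f.read())
    time.sleep(interval_s)
    with open(path) as f:
        after = parse_diskstats(f.read())
    return iops(before, after, interval_s, device)
```

Calling `disk_saturation(sample_iops("sda"))` while the benchmark runs (with `"sda"` replaced by the device that holds the datadir) gives a rough utilization figure. A value well below 1.0 would be consistent with the disk not being the limiting factor.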

I asked my colleagues from “Postgres Professional” to test PostgreSQL with the ramdisk. They got similar results:

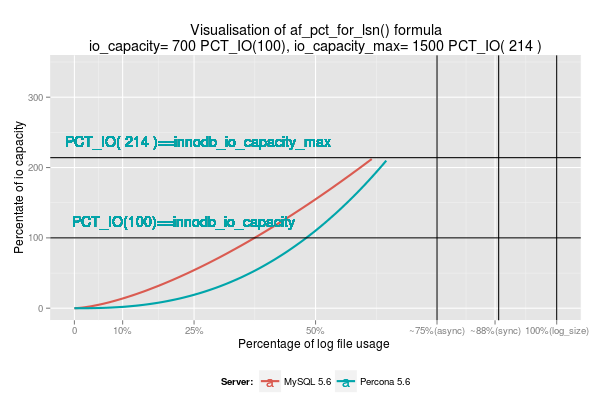

It’s interesting that the value of `innodb_io_capacity` does not have any effect on this situation. Data for the graph below was taken when I ran tests on ramdisk. I wanted to see if I could control the IO activity of a disk, which is extremely fast by default, using this variable.
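An experiment like this amounts to stepping `innodb_io_capacity` through a series of values and running the benchmark at each step. As a rough sketch only, the helper below builds the statements for such a sweep. The function name and the sweep values are hypothetical, not the ones used in these tests. It also raises `innodb_io_capacity_max` first, on the assumption that the server would otherwise cap values above the current maximum.

```python
def io_capacity_sweep(values):
    """Return SQL statements that step innodb_io_capacity through values.

    The first statement raises innodb_io_capacity_max to the largest
    value in the sweep so that the later settings are not capped.
    """
    values = [int(v) for v in values]
    if not values:
        raise ValueError("need at least one value to sweep")
    if min(values) < 100:
        # 100 is the documented minimum for innodb_io_capacity.
        raise ValueError("innodb_io_capacity cannot be set below 100")

    statements = [f"SET GLOBAL innodb_io_capacity_max = {max(values)};"]
    for value in values:
        statements.append(f"SET GLOBAL innodb_io_capacity = {value};")
    return statements


# Hypothetical sweep values, for illustration only.
for statement in io_capacity_sweep([200, 2000, 20000]):
    print(statement)
```

Each `SET GLOBAL innodb_io_capacity` statement would be followed by a benchmark run before moving to the next value. Both variables are dynamic, so no server restart is needed between steps.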

This totally contradicts all my past experiences with smaller machines. Percona re-purposed the machine with a faster disk (which I used before, described in this post), so I used a similar one with slower disk speed.

Hardware configuration:

Processors: physical = 2, cores = 12, virtual = 24, hyperthreading = yes

Memory: 47.2G

Disk speed: about 3K IOPS

OS: Ubuntu 14.04.5 LTS (trusty)

File system: ext4

Again, in this case `innodb_io_capacity` benchmarks with a smaller number of CPU cores showed more predictable results.

Conclusion:

Both MySQL and PostgreSQL on a machine with a large number of CPU cores hit CPU resource limits before disk speed can start affecting performance. We only tested one scenario, however. With other scenarios, the results might be different.