As Nvidia continues to work through its deal to acquire Arm from SoftBank for $40 billion, the computing giant is making another big move to lay out its commitment to investing in U.K. technology. Today the company announced plans to develop Cambridge-1, a new £40 million AI supercomputer that will be used for research in the health industry in the country, the first supercomputer built by Nvidia specifically for external research access, it said.

Nvidia said it is already working with GSK, AstraZeneca, London hospitals Guy’s and St Thomas’ NHS Foundation Trust, King’s College London and Oxford Nanopore to use the Cambridge-1. The supercomputer is due to come online by the end of the year and will be the company’s second supercomputer in the country. The first is already in development at the company’s AI Center of Excellence in Cambridge, and the plan is to add more supercomputers over time.

The growing role of AI has underscored an interesting crossroads in medical research. On one hand, leading researchers all acknowledge the role it will be playing in their work. On the other, none of them (nor their institutions) have the resources to meet that demand on their own. That’s driving them all to get involved much more deeply with big tech companies like Google, Microsoft and, in this case, Nvidia, to carry out work.

Alongside the supercomputer news, Nvidia is making a second announcement in the area of healthcare in the U.K.: it has inked a partnership with GSK, which has established an AI hub in London, to build AI-based computational processes that will be used in drug vaccine and discovery — an especially timely piece of news, given that we are in a global health pandemic and all drug makers and researchers are on the hunt to understand more about, and build vaccines for, COVID-19.

The news is coinciding with Nvidia’s industry event, the GPU Technology Conference.

“Tackling the world’s most pressing challenges in healthcare requires massively powerful computing resources to harness the capabilities of AI,” said Jensen Huang, founder and CEO of Nvidia, in his keynote at the event. “The Cambridge-1 supercomputer will serve as a hub of innovation for the U.K., and further the groundbreaking work being done by the nation’s researchers in critical healthcare and drug discovery.”

The company plans to dedicate Cambridge-1 resources in four areas, it said: industry research, in particular joint research on projects that exceed the resources of any single institution; university granted compute time; health-focused AI startups; and education for future AI practitioners. It’s already building specific applications in areas, like the drug discovery work it’s doing with GSK, that will be run on the machine.

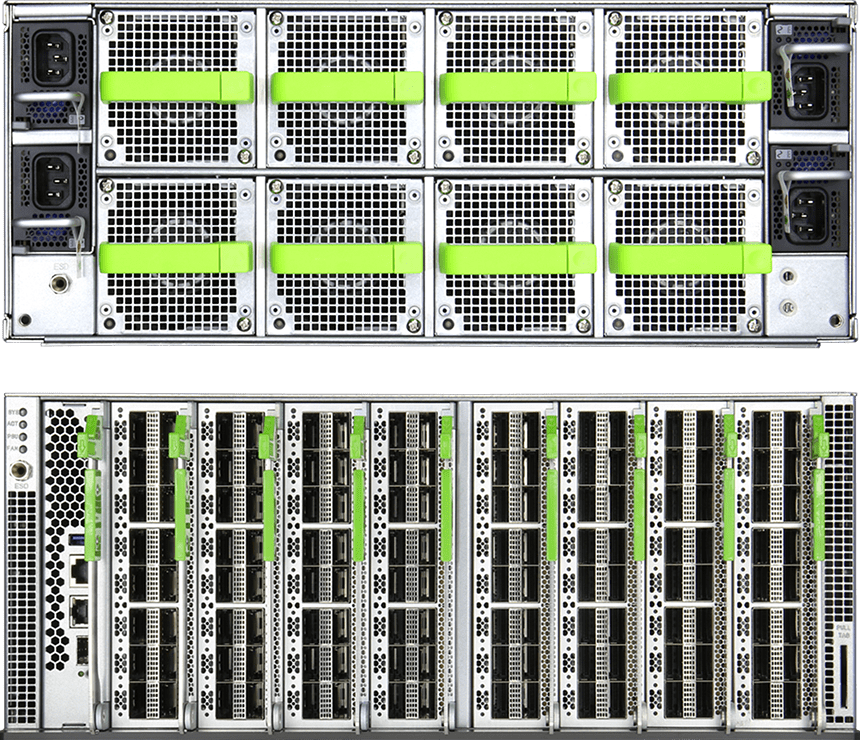

The Cambridge-1 will be built on Nvidia’s DGX SuperPOD system, which can process 400 petaflops of AI performance and 8 petaflops of Linpack performance. Nvidia said this will rank it as the 29th fastest supercomputer in the world.

“Number 29” doesn’t sound very groundbreaking, but there are other reasons why the announcement is significant.

For starters, it underscores how the supercomputing market — while still not a mass-market enterprise — is increasingly developing more focus around specific areas of research and industries. In this case, it underscores how health research has become more complex, and how applications of artificial intelligence have both spurred that complexity but, in the case of building stronger computing power, also provides a better route — some might say one of the only viable routes in the most complex of cases — to medical breakthroughs and discoveries.

It’s also notable that the effort is being forged in the U.K. Nvidia’s deal to buy Arm has seen some resistance in the market — with one group leading a campaign to stop the sale and take Arm independent — but this latest announcement underscores that the company is already involved pretty deeply in the U.K. market, bolstering Nvidia’s case to double down even further. (Yes, chip reference designs and building supercomputers are different enterprises, but the argument for Nvidia is one of commitment and presence.)

“AI and machine learning are like a new microscope that will help scientists to see things that they couldn’t see otherwise,” said Dr. Hal Barron, chief scientific officer and president, R&D, GSK, in a statement. “NVIDIA’s investment in computing, combined with the power of deep learning, will enable solutions to some of the life sciences industry’s greatest challenges and help us continue to deliver transformational medicines and vaccines to patients. Together with GSK’s new AI lab in London, I am delighted that these advanced technologies will now be available to help the U.K.’s outstanding scientists.”

“The use of big data, supercomputing and artificial intelligence have the potential to transform research and development; from target identification through clinical research and all the way to the launch of new medicines,” added James Weatherall, PhD, head of Data Science and AI, AstraZeneca, in his statement.

“Recent advances in AI have seen increasingly powerful models being used for complex tasks such as image recognition and natural language understanding,” said Sebastien Ourselin, head, School of Biomedical Engineering & Imaging Sciences at King’s College London. “These models have achieved previously unimaginable performance by using an unprecedented scale of computational power, amassing millions of GPU hours per model. Through this partnership, for the first time, such a scale of computational power will be available to healthcare research – it will be truly transformational for patient health and treatment pathways.”

Dr. Ian Abbs, chief executive & chief medical director of Guy’s and St Thomas’ NHS Foundation Trust Officer, said: “If AI is to be deployed at scale for patient care, then accuracy, robustness and safety are of paramount importance. We need to ensure AI researchers have access to the largest and most comprehensive datasets that the NHS has to offer, our clinical expertise, and the required computational infrastructure to make sense of the data. This approach is not only necessary, but also the only ethical way to deliver AI in healthcare – more advanced AI means better care for our patients.”

“Compact AI has enabled real-time sequencing in the palm of your hand, and AI supercomputers are enabling new scientific discoveries in large-scale genomic data sets,” added Gordon Sanghera, CEO, Oxford Nanopore Technologies. “These complementary innovations in data analysis support a wealth of impactful science in the U.K., and critically, support our goal of bringing genomic analysis to anyone, anywhere.”