French startup Dataiku has been profitable for the past three years. But the company wants to go further and is raising a $14 million Series A round led by FirstMark Capital, with all existing investors participating.

Dataiku helps data scientists and data analysts manage huge data sets and extract insights about what’s happening with their customers, suppliers, transactions and more.

25

2016

Dataiku grabs $14 million for its collaborative data science platform

25

2016

HYPR raises $3 million to keep hackers from getting their hands on your fingerprints

If your account gets hacked these days, the first thing you’ll typically do is reset your password, maybe beef up your security settings, and proceed to use the account or app again. But what if hackers get their hands on your fingerprint, iris or other biometric data? You can’t just change your fingerprints or eyes on a whim. That’s where a New York City startup called…

24

2016

TokuDB and PerconaFT Data Files: Database File Management Part 2 of 2

This is the second installment of the blog series on TokuDB and PerconaFT data files. You can find my previous post here. In this post we will discuss some common file maintenance operations and how to safely execute these operations.

Moving TokuDB data (table/index) files to another location outside of the default MySQL datadir

TokuDB uses the location specified by the tokudb_data_dir variable for all of its data files. If you don’t explicitly set the tokudb_data_dir variable, TokuDB uses the location specified by the server’s datadir for these files.

The __tokudb_lock_dont_delete_me_data file, located in the same directory as the TokuDB data files, protects TokuDB data files from concurrent process access.

TokuDB data files can be moved to other locations, and symlinks left behind in their place. If those symlinks refer to files on other physical data volumes, the tokudb_fs_reserve_percent monitor will not traverse the symlinks, so it will not monitor the real location for adequate space in the file system.

To safely move your TokuDB data files:

- Shut down the server cleanly.

- Change the tokudb_data_dir in your my.cnf to the location where you wish to store your TokuDB data files.

- Create your new target directory.

- Move your *.tokudb files and your __tokudb_lock_dont_delete_me_data from the current location to the new location.

- Restart your server.
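The file-moving part of the steps above can be sketched in Python. This is a minimal illustration, not a Percona tool: the function name move_tokudb_data_files is hypothetical, and the sketch assumes the server has already been shut down cleanly and that tokudb_data_dir in my.cnf has already been changed by hand.

```python
import shutil
from pathlib import Path

# Lock file name as given in the post.
LOCK_FILE = "__tokudb_lock_dont_delete_me_data"


def move_tokudb_data_files(current_dir, new_dir):
    """Move *.tokudb files and the data lock file; return the moved file names.

    Hypothetical helper: assumes mysqld is stopped and my.cnf already points
    tokudb_data_dir at new_dir.
    """
    src = Path(current_dir)
    dst = Path(new_dir)
    dst.mkdir(parents=True, exist_ok=True)  # create the new target directory

    candidates = sorted(src.glob("*.tokudb"))  # data files, top level only
    lock = src / LOCK_FILE
    if lock.exists():
        candidates.append(lock)  # the lock file travels with the data files

    moved = []
    for path in candidates:
        shutil.move(str(path), str(dst / path.name))
        moved.append(path.name)
    return moved
```

Files that do not match *.tokudb (for example, other engines' files in the same directory) are left where they are.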

Moving the TokuDB temporary files to another location outside of the default MySQL datadir

TokuDB uses the location specified by the tokudb_tmp_dir variable for all of its temporary files. If you don’t explicitly set the tokudb_tmp_dir variable, TokuDB uses the location specified by the tokudb_data_dir. If you don’t explicitly set the tokudb_data_dir variable, TokuDB uses the location specified by the server’s datadir for these files.

The __tokudb_lock_dont_delete_me_temp file, located in the same directory as the TokuDB temporary files, protects the TokuDB temporary files from concurrent process access.

If you locate your TokuDB temporary files on a physical volume that is different from where your TokuDB data files or recovery log files are located, the tokudb_fs_reserve_percent monitor will not monitor their location for adequate space in the file system.
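Since the built-in monitor does not cover a directory on a different volume, you may want to check it yourself. The following is a rough sketch using only the Python standard library; the function names are hypothetical, and the threshold to compare against is left to the caller.

```python
import os
import shutil


def free_space_percent(path):
    """Return the percentage of free space on the file system holding path."""
    # realpath resolves symlinks so the real location is measured.
    usage = shutil.disk_usage(os.path.realpath(path))
    return 100.0 * usage.free / usage.total


def on_same_volume(path_a, path_b):
    """Return True if both paths live on the same device (symlinks followed)."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev
```

For example, if on_same_volume(tmp_dir, data_dir) is False, the temporary directory is a candidate for a separate free_space_percent(tmp_dir) check from your own monitoring.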

To safely move your TokuDB temporary files:

- Shut the server down cleanly. A clean shutdown will ensure that there are no temporary files that need to be relocated.

- Change the tokudb_tmp_dir in your my.cnf to the location where you wish to store your new TokuDB temporary files.

- Create your new target directory.

- Move your __tokudb_lock_dont_delete_me_temp file from the current location to the new location.

- Restart your server.

Moving TokuDB recovery log files to another location outside of the default MySQL datadir

TokuDB uses the location specified by the tokudb_log_dir variable for all of its recovery log files. If the tokudb_log_dir variable is not explicitly set, TokuDB uses the location specified by the server’s datadir for these files.
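The fallback rules for the three directory variables described in this post can be summarized in a few lines of Python. This is only a model of the documented behavior, with a hypothetical function name and a plain dict standing in for the server settings; it does not read my.cnf.

```python
def resolve_tokudb_dirs(settings):
    """Return the effective TokuDB directories for a dict of settings.

    Mirrors the fallbacks described in the post:
      tokudb_data_dir -> datadir
      tokudb_tmp_dir  -> tokudb_data_dir -> datadir
      tokudb_log_dir  -> datadir
    """
    datadir = settings["datadir"]
    data_dir = settings.get("tokudb_data_dir") or datadir
    tmp_dir = settings.get("tokudb_tmp_dir") or data_dir
    log_dir = settings.get("tokudb_log_dir") or datadir
    return {"data": data_dir, "tmp": tmp_dir, "log": log_dir}
```

Note that setting only tokudb_data_dir also relocates the temporary files, while the recovery logs stay in the server’s datadir.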

The __tokudb_lock_dont_delete_me_logs file, located in the same directory as the TokuDB recovery log files, protects TokuDB recovery log files from concurrent process access.

TokuDB recovery log files can be moved to another location and a symlink left behind in place of the tokudb_log_dir. If that symlink refers to a directory on another physical data volume, the tokudb_fs_reserve_percent monitor will not traverse the symlink, so it will not monitor the real location for adequate space in the file system.

To safely move your TokuDB recovery log files:

- Shut the server down cleanly.

- Change the tokudb_log_dir in your my.cnf to the location where you wish to store your TokuDB recovery log files.

- Create your new target directory.

- Move your log*.tokulog* files and your __tokudb_lock_dont_delete_me_logs file from the current location to the new location.

- Restart your server.

I hope this blog series is helpful. Another post discussing a long-awaited new feature to help you manage and organize your TokuDB data files is coming soon.

24

2016

Announcing MyRocks in Percona Server for MySQL

RocksDB has been taking the world by storm!

RocksDB counts Facebook, LinkedIn, Pinterest, Airbnb and Netflix among its users. It is the storage engine for many open source database technologies, including CockroachDB, Apache Flink, TiKV and Dgraph. It is also integrated with MySQL and MongoDB as the MyRocks and MongoRocks storage engines, respectively.

Percona was an early RocksDB supporter, as well as an early adopter. MongoRocks has been included with Percona Server for MongoDB since its first GA version.

RocksDB has proven itself to be a very stable, high-performance technology. The enthusiastic, high-energy engineering team behind it cares not just about making it work for Facebook (which can be the case with some technologies built mainly for internal use), but also about satisfying the needs of the open source community at large. So it shouldn’t be a big surprise that RocksDB is getting such traction.

We have been watching RocksDB’s MySQL implementation, MyRocks, very closely. It’s been steadily getting better performance and adding more and more features – making it feasible for a broad range of general MySQL workloads (not just Facebook use cases).

What was missing, however, were large-scale production deployments of MyRocks. There is a big difference between how a technology does in tests and how it does “in the wild.” Facebook announced last month that they completed a large-scale migration from InnoDB to MyRocks, and now use MyRocks in production.

As a result, Percona decided it was time to bring MyRocks to Percona Server. Including MyRocks with Percona Server will help make it easily accessible to more MySQL users. I announced the addition of MyRocks to Percona Server during my keynote speech at this year’s Percona Live Europe.

The MySQL community’s reaction was overwhelmingly positive. They can’t wait to explore MyRocks, and see if it is right for their workload. Percona engineering teams are working as fast as possible to release the Beta version of Percona Server with MyRocks (with a GA soon to follow), but if you can’t wait that long you can check out experimental MyRocks Docker images here.

Reading this, many of you are likely wondering: when? In our experience, integrating a storage engine into database software is not a trivial task, and progress is often tricky. Completing 95% of the integration is easy enough (sufficient for most workloads). However, the last 5% of the integration – dealing with outliers and corner cases – can be quite difficult. You often discover more issues just when you think it’s done.

We know you have high expectations for Percona Server, and need it to handle the most demanding environments. With this in mind, we’re not going to rush a Percona Server with MyRocks release. We will provide the experimental builds of MyRocks in Percona Server in Q1 2017, and we encourage you to start testing and experimenting so we can quickly release a solid GA version.

Percona’s purpose is to champion the best solutions for the open source community, and we think including MyRocks as an option for Percona Server for MySQL is a step in that direction.

24

2016

Microsoft to hike UK enterprise prices after Brexit pounds sterling

UK businesses that buy enterprise software or cloud services from Microsoft are facing a price hike from January 1.

22

2016

Indie Author Day 2016

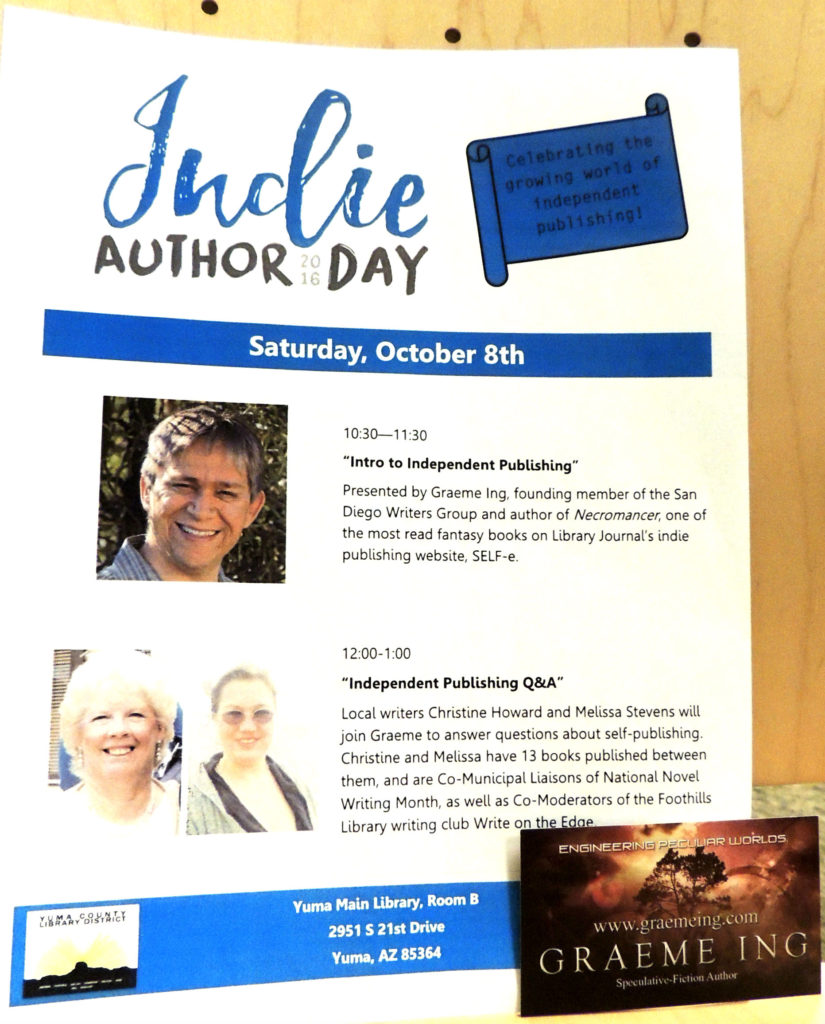

October 8th: Tamara and I drove out to Yuma, Az to take part in the Yuma County Library's Indie Author Day, organized by the SELF-e program.

SELF-e is powered by Library Journal and acts as a kind of matchmaking service to help Indie authors get their ebooks into the library system. It's slowly rolling out across the US and Canada, so not every library is a part of the program yet. SELF-e contacted me a couple of years ago and asked if I was interested.

Um… yes! I grew up in libraries. They are a vital part of the local community, bringing the joy of books to everyone. I absolutely would love to support the libraries. I entered both my fantasy novels into the SELF-e program.

I was astonished in 2015 when they contacted me to tell me that my Necromancer had placed in the top 3 most-read Indie fantasy category. Wow!

Then earlier in 2016 they contacted me again asking if I wanted to be a part of Indie Author Day. Again… hello… yes! The plan was that I would give a 45 minute presentation as an intro to Indie Publishing and then take part in a Q&A panel with two other authors. It was a total blast and the audience asked fantastic questions.

Before we get to the photos, I want to thank Becky and Vanna at Yuma Central Library for organizing the event and making us feel so welcome. They bought us dinner the night before and were a crucial part of the whole event going smoothly. Thanks both of you! Wonderful hosts!

Here's the panel. In the center is PNR author Melissa Stevens and on the left, Christine Howard. I had a great time sharing the Q&A session with them. Very knowledgeable and friendly folk.

21

2016

Replication Triggers a Performance Schema Issue on Percona XtraDB Cluster

In this blog post, we’ll look at how replication triggers a Performance Schema issue on Percona XtraDB Cluster.

During an upgrade to Percona XtraDB Cluster 5.6, I faced an issue that I wanted to share. In this environment, we set up three Percona XtraDB Cluster nodes (mostly configured as default), copied from a production server. We configured one of the members of the cluster as the slave of the production server.

During the testing process, we found that a full table scan query ran four times faster on the nodes where replication was not configured. After reviewing almost everything related to the query, we decided to use perf.

We executed:

perf record -a -g -F99 -p $(pidof mysqld) -- sleep 60

And the query in another terminal a couple of times. Then we executed:

perf report > perf.out

And we found in the perf.out this useful information:

    # To display the perf.data header info, please use --header/--header-only options.
    #
    # Samples: 5K of event 'cpu-clock'
    # Event count (approx.): 57646464070
    #
    # Children      Self  Command  Shared Object  Symbol
    # ........  ........  .......  .............  ......
    #
        62.03%    62.01%  mysqld   mysqld         [.] my_timer_cycles
                |
                ---my_timer_cycles

         4.66%     4.66%  mysqld   mysqld         [.] 0x00000000005425d4
                |
                ---0x5425d4

         4.66%     0.00%  mysqld   mysqld         [.] 0x00000000001425d4
                |
                ---0x5425d4

         3.31%     3.31%  mysqld   mysqld         [.] 0x00000000005425a7
                |
                ---0x5425a7

As you can see, the my_timer_cycles function took 62.03% of the time. Related to this, we found a blog post (http://dtrace.org/blogs/brendan/2011/06/27/viewing-the-invisible/) that explained how performance dropped 10% after enabling the Performance Schema. So, we decided to disable Performance Schema in order to see if this issue was related to the one described in that post. We found that after the restart required by disabling Performance Schema, the query was taking the expected amount of time.
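When a perf report is long, it can help to extract the hottest symbols programmatically. The sketch below is a hypothetical helper, not part of perf: it assumes the report lines have the "Children Self Command Shared Object [type] Symbol" layout shown in the output above, which can differ between perf versions and options.

```python
import re

# One data row of `perf report` output, as laid out in the sample above.
# The layout is an assumption based on that sample.
LINE_RE = re.compile(
    r"^\s*(?P<children>\d+\.\d+)%\s+(?P<self>\d+\.\d+)%\s+"
    r"(?P<command>\S+)\s+(?P<object>\S+)\s+\[(?P<type>.)\]\s+(?P<symbol>.+?)\s*$"
)


def top_symbols(report_text, limit=3):
    """Return up to `limit` (self_percent, symbol) pairs, highest first."""
    rows = []
    for line in report_text.splitlines():
        match = LINE_RE.match(line)
        if match:  # header ('#') and call-graph ('|', '---') lines are skipped
            rows.append((float(match.group("self")), match.group("symbol")))
    rows.sort(key=lambda row: row[0], reverse=True)
    return rows[:limit]
```

Reading the file written by the earlier command, for example with top_symbols(open("perf.out").read()), would list my_timer_cycles first for the report shown here.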

We also found out that this was triggered by replication, and nodes rebuilt from this member might have this issue. It was the same if you rebuilt from a member that was OK: the new member might execute the query more slowly.

Finally, you should take into account that my_timer_cycles seems to be called on a per-row basis, so if your dataset is small you will never notice this issue. However, if you are doing a full table scan of a million-row table, you could face this issue.

Conclusion

If you are having query performance issues, and you can’t find the root cause, try disabling or debugging instruments from the Performance Schema to see if that is causing the issue.

21

2016

Percona Responds to East Coast DDoS Attack

As noted in several media outlets, many web sites have been affected by a DDoS attack on Dyn today. Since Percona uses Dyn for its DNS server, we are experiencing issues as well.

The attack has impacted the percona.com web site availability and performance, including all related services such as our forums, blogs and downloads.

Our first response was to wait it out, and trust the Dyn team to deal with the attack — they have to handle issues like this all the time, and are generally pretty good at resolving these issues quickly. This was not the case today.

As such, to restore service, we have added another DNS provider (DNS Made Easy). This has restored connectivity for the majority of users, and the situation should continue to improve as the changed list of DNS servers propagates (check current status).

Our customer support site, Zendesk, has also been impacted by the DDoS attack. We are using a similar strategy to remedy our support site. You can see the current status for Zendesk’s domain resolution here.

For additional information about this incident, check the official Dyn incident status page.

If you’re a Percona customer and have trouble accessing our Customer Support portal, do not hesitate to call or Skype us instead.

Thank you for your patience. We will provide updates as the situation develops.

21

2016

Percona Server 5.7.15-9 is now available

Percona announces the GA release of Percona Server 5.7.15-9 on October 21, 2016. Download the latest version from the Percona web site or the Percona Software Repositories.

Based on MySQL 5.7.15, including all the bug fixes in it, Percona Server 5.7.15-9 is the current GA release in the Percona Server 5.7 series. Percona provides completely open-source and free software. Find release details in the 5.7.15-9 milestone at Launchpad.

New Features

- A new TokuDB tokudb_dir_per_db option was introduced to address two TokuDB shortcomings: the renaming of data files on table/index rename, and the ability to group data files together within a directory that represents a single database. This feature is enabled by default.

Bugs Fixed

- Audit Log Plugin malformed record could be written after audit_log_flush was set to ON in ASYNC and PERFORMANCE modes. Bug fixed #1613650.

- Running SELECT DISTINCT x...ORDER BY y LIMIT N,N could lead to a server crash. Bug fixed #1617586.

- Workloads with statements that take non-transactional locks (LOCK TABLES, global read lock, and similar) could have caused deadlocks when running under Thread Pool with high priority queue enabled and thread_pool_high_prio_mode set to transactions. Fixed by placing such statements into the high priority queue even with the above thread_pool_high_prio_mode setting. Bugs fixed #1619559 and #1374930.

- Fixed memory leaks in Audit Log Plugin. Bug fixed #1620152 (upstream #71759).

- Server could crash due to a glibc bug in handling short-lived detached threads. Bug fixed #1621012 (upstream #82886).

- QUERY_RESPONSE_TIME_READ and QUERY_RESPONSE_TIME_WRITE were returning QUERY_RESPONSE_TIME table data if accessed through a name that is not full uppercase. Bug fixed #1552428.

- Cipher ECDHE-RSA-AES128-GCM-SHA256 was listed in the list of supported ciphers but it wasn’t supported. Bug fixed #1622034 (upstream #82935).

- Successful recovery of a torn page from the doublewrite buffer was shown as a warning in the error log. Bug fixed #1622985.

- LRU manager threads could run too long on a server shutdown, causing a server crash. Bug fixed #1626069.

- tokudb_default was not recognized by Percona Server as a valid row format. Bug fixed #1626206.

- InnoDB ANALYZE TABLE didn’t remove its table from the background statistics processing queue. Bug fixed #1626441 (upstream #71761).

- Upstream merge for #81657 to 5.6 was incorrect. Bug fixed #1626936 (upstream #83124).

- Fixed multi-threaded slave thread leaks that happened in case of thread create failure. Bug fixed #1619622 (upstream #82980).

- Shutdown waiting for a purge to complete was undiagnosed for the first minute. Bug fixed #1616785.

Other bugs fixed: #1614439, #1614949, #1624993 (#736), #1613647, #1615468, #1617828, #1617833, #1626002 (upstream #83073), #904714, #1610102, #1610110, #1613728, #1614885, #1615959, #1616333, #1616404, #1616768, #1617150, #1617216, #1617267, #1618478, #1618819, #1619547, #1619572, #1620583, #1622449, #1623011, #1624992 (#1014), #735, #1626500, #1628913, #952920, and #964.

NOTE: If you haven’t already, make sure to add the new Debian/Ubuntu repository signing key.

The release notes for Percona Server 5.7.15-9 are available in the online documentation. Please report any bugs on the Launchpad bug tracker.

20

2016

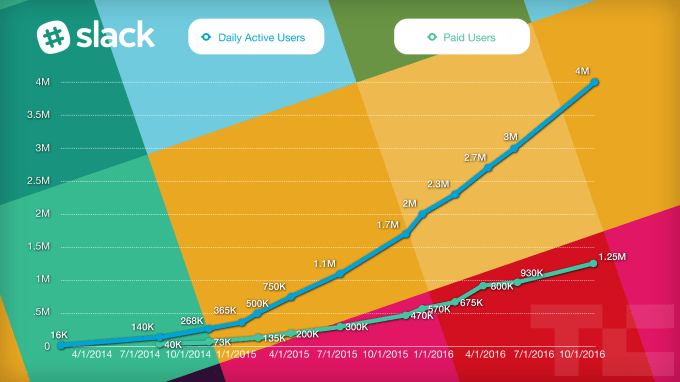

Slack’s rapid growth slows as it hits 1.25M paying work chatters

Slack is still growing fast, about 33% in daily users and paid seats in the last 5 months. But not as fast as before, when it saw 50% DAU growth and 63% paid seat growth in the 5.5 months from December 15th to May 25th. This aligns with rumors TechCrunch has heard about a slight dip in customer retention at Slack, as the casual, GIF-filled workplace chat app can get noisy and distracting…
