Artificial intelligence technology holds a huge amount of promise for enterprises — as a tool to process and understand their data more efficiently; as a way to leapfrog into new kinds of services and products; and as a critical stepping stone into whatever the future might hold for their businesses. But the problem for many enterprises is that they are not tech businesses at their core, so bringing on and using AI will typically involve a lot of heavy lifting. Today, one of the startups building AI services is announcing a big round of funding to help bridge that gap.

SambaNova, a startup building AI hardware and the integrated systems that run on it, which officially came out of three years in stealth only last December, is announcing a huge round of funding today to take its business out into the world. The company has closed on $676 million in financing, a Series D that co-founder and CEO Rodrigo Liang has confirmed values the company at $5.1 billion.

The round is being led by SoftBank, which is making the investment via Vision Fund 2. Temasek and the Government of Singapore Investment Corp. (GIC), both new investors, are also participating, along with previous backers BlackRock, Intel Capital, GV (formerly Google Ventures), Walden International and WRVI, among other unnamed investors. (Sidenote: BlackRock and Temasek separately kicked off an investment partnership yesterday, although it’s not clear if this round falls into that remit.)

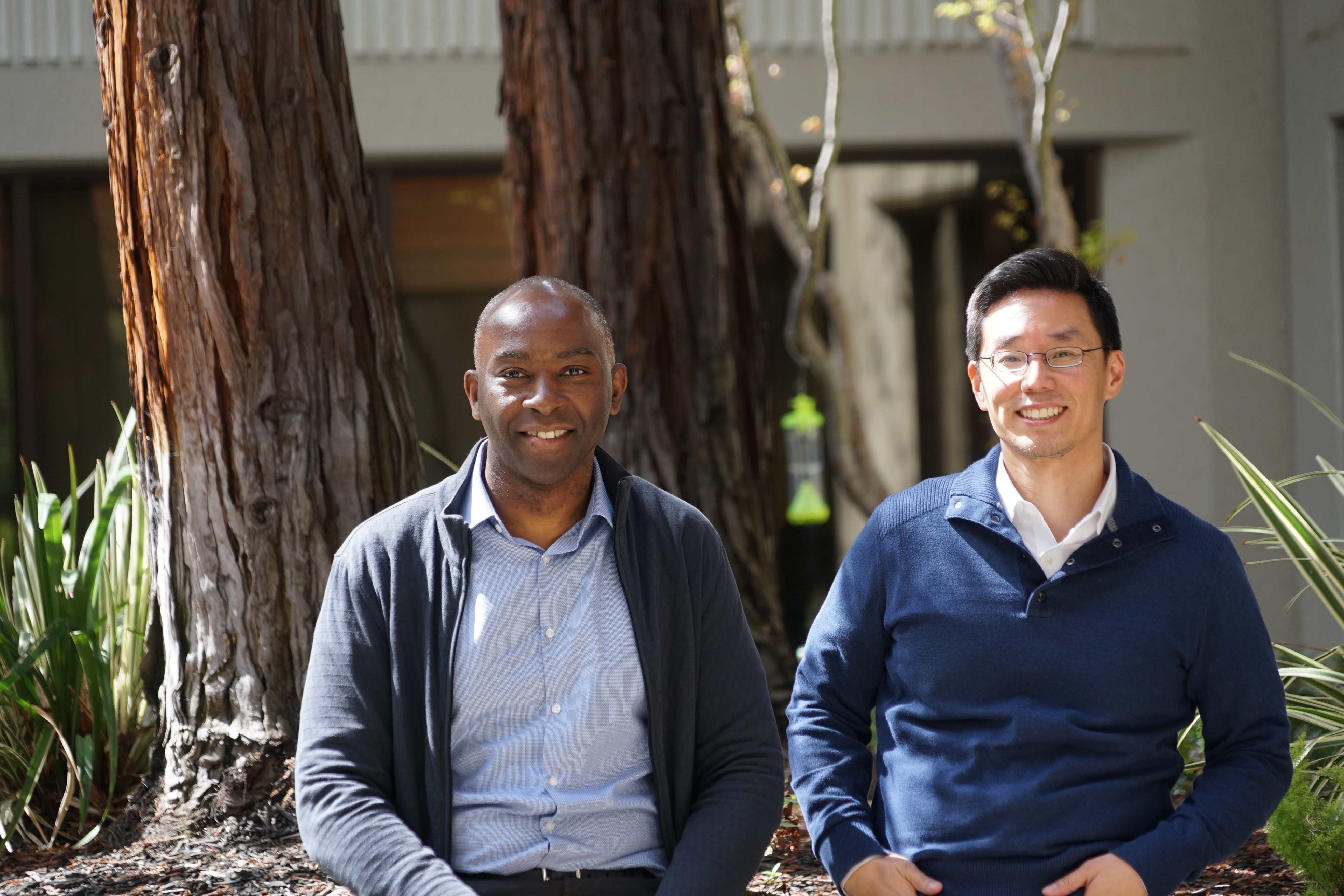

Co-founded by two Stanford professors, Kunle Olukotun and Chris Ré, and Liang, who had been an engineering executive at Oracle, SambaNova has been around since 2017 and has raised more than $1 billion to date — both to build out its AI-focused hardware, which it calls DataScale, and to build out the system that runs on it. (The “Samba” in the name is a reference to Liang’s Brazilian heritage, he said, but also the Latino music and dance that speaks of constant movement and shifting, not unlike the journey AI data regularly needs to take that makes it too complicated and too intensive to run on more traditional systems.)

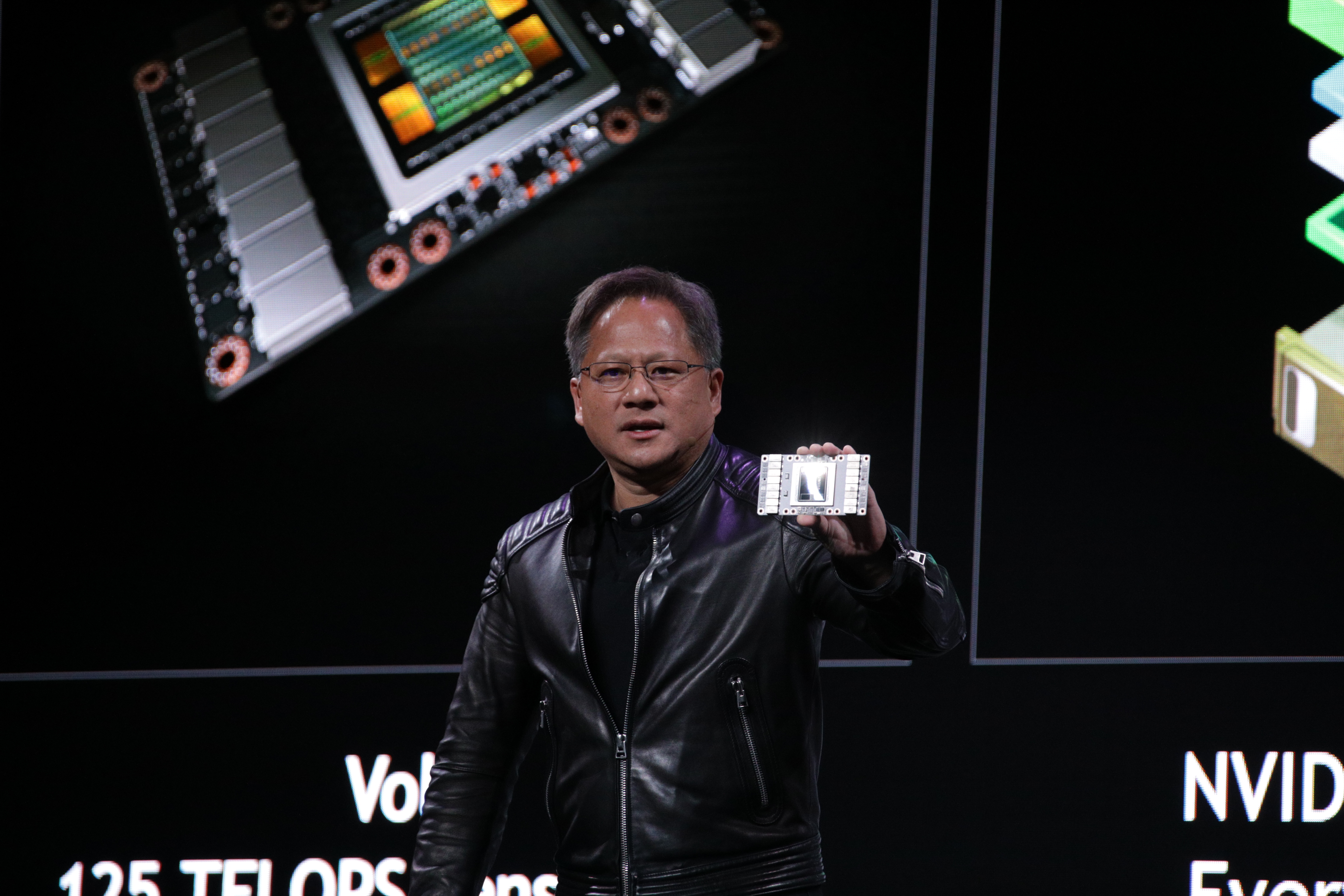

SambaNova on one level competes for enterprise business against companies like Nvidia, Cerebras Systems and Graphcore — another startup in the space which earlier this year also raised a significant round. However, SambaNova has also taken a slightly different approach to the AI challenge.

In December, the startup launched Dataflow-as-a-Service as an on-demand, subscription-based way for enterprises to tap into SambaNova’s AI system, with the focus just on the applications that run on it, without needing to maintain those systems themselves. It’s this subscription service that SambaNova will be focusing on selling and delivering with this latest tranche of funding, Liang said.

SambaNova’s opportunity, Liang believes, lies in selling software-based AI systems to enterprises that are keen to adopt more AI into their business, but might lack the talent and other resources to do so if it requires running and maintaining large systems.

“The market right now has a lot of interest in AI. They are finding they have to transition to this way of competing, and it’s no longer acceptable not to be considering it,” said Liang in an interview.

The problem, he said, is that most AI companies “want to talk chips,” yet many would-be customers will lack the teams and appetite to essentially become technology companies to run those services. “Rather than you coming in and thinking about how to hire scientists and hire and then deploy an AI service, you can now subscribe, and bring in that technology overnight. We’re very proud that our technology is pushing the envelope on cases in the industry.”

To be clear, a company will still need data scientists, just not the same number, and specifically not the same number dedicating their time to maintaining systems, updating code and other more incremental work that comes with managing an end-to-end process.

SambaNova has not disclosed many customers so far in the work that it has done — the two reference names it provided to me are both research labs, the Argonne National Laboratory and the Lawrence Livermore National Laboratory — but Liang noted some typical use cases.

One was in imaging, such as in the healthcare industry, where the company’s technology is being used to help train systems based on high-resolution imagery, along with other healthcare-related work. The coincidentally-named Corona supercomputer at the Livermore Lab (it was named after the total solar eclipse of 2017, not the dark cloud of a pandemic that we’re currently living through) is using SambaNova’s technology to help run calculations related to some COVID-19 therapeutic and antiviral compound research, Marshall Choy, the company’s VP of product, told me.

Another set of applications involves building systems around custom language models, for example in specific industries like finance, to process data more quickly. And a third is in recommendation algorithms, something that appears in most digital services and frankly could always stand to work a little better than it does today. I’m guessing that in the coming months SambaNova will release more information about where its technology is being used and by whom.

Liang also would not comment on whether Google and Intel were specifically tapping SambaNova as a partner in their own AI services, but he didn’t rule out the prospect of partnering to go to market. Indeed, both have strong enterprise businesses that span well beyond technology companies, so working with a third party that helps make even their own AI cores more accessible could be an interesting prospect. SambaNova’s DataScale and the Dataflow-as-a-Service system both take input from frameworks like PyTorch and TensorFlow, so there is a level of integration already there.

“We’re quite comfortable in collaborating with others in this space,” Liang said. “We think the market will be large and will start segmenting. The opportunity for us is in being able to take hold of some of the hardest problems in a much simpler way on their behalf. That is a very valuable proposition.”

The promise of creating a more accessible AI for businesses is one that has eluded quite a few companies to date, so the prospect of finally cracking that nut is one that appeals to investors.

“SambaNova has created a leading systems architecture that is flexible, efficient and scalable. This provides a holistic software and hardware solution for customers and alleviates the additional complexity driven by single technology component solutions,” said Deep Nishar, senior managing partner at SoftBank Investment Advisers, in a statement. “We are excited to partner with Rodrigo and the SambaNova team to support their mission of bringing advanced AI solutions to organizations globally.”
