ERROR 1040…again

ERROR 1040…again

A pretty common topic in Support tickets is the rather infamous error: ERROR 1040: Too many connections. The issue is pretty self-explanatory: your application/users are trying to create more connections than the server allows, or in other words, the current number of connections exceeds the value of the max_connections variable.

This situation on its own is already a problem for your end-users, but when on top of that you are not able to access the server to diagnose and correct the root cause, then you have a really big problem; most times you will need to terminate the instance and restart it to recover.

Root user can’t connect either! Why!?

In a properly set up environment, a user with SUPER privilege will be able to access the instance and diagnose the error 1040 problem that is causing connection starvation, as explained in the manual:

mysqld actually permits

max_connections+ 1 client connections. The extra connection is reserved for use by accounts that have theSUPERprivilege. By granting the privilege to administrators and not to normal users (who should not need it), an administrator who also has thePROCESSprivilege can connect to the server and useSHOW PROCESSLISTto diagnose problems even if the maximum number of unprivileged clients are connected.

But we see lots of people who give SUPER privileges to their application or script users, either due to application requirements (dangerous!) or due to lack of knowledge regarding the consequences, but the case is then that the reserved connection is taken by a regular user, and your administrative user (usually root) won’t be able to connect.

How to guarantee access to the instance

Besides resorting to the well known GDB hack devised by Aurimas long ago for Error 1040, there are now better solutions, but you need to enable them first.

With Percona Server 5.5.29 and up, and with MySQL 8.0.14 and up, you can set up an extra port that allows a number of extra connections. These additional interfaces will not be used by your applications; they are only for your database administrators and monitoring/health-check agents (see note on this further below).

Setting up in Percona Server

Starting with Percona Server 5.5.29, you can simply add extra_port to your my.cnf and the next time you restart the port will become available and will listen on the same bind_address as regular connections. If you don’t set the extra_port variable, no additional port will be available by default.

You can also define extra_max_connections which sets the number of connections this port will handle. The default value for this is 1.

For a quick demo, I have saturated connections on the regular users port of an instance where I already have set extra_port and extra_max_connections in the my.cnf:

~ egrep 'port|extra' my.sandbox.cnf

port = 45989

extra_port = 45999

extra_max_connections = 10

# attempt to show some variables

~ mysql --host=127.0.0.1 --port=45989 --user=msandbox --password -e "SHOW GLOBAL VARIABLES WHERE Variable_name IN ('port', 'extra_port')"

ERROR 1040 (HY000): Too many connections

# now again, through the extra_port

~ mysql --host=127.0.0.1 --port=45999 --user=msandbox --password -e "SHOW GLOBAL VARIABLES WHERE Variable_name IN ('port', 'extra_port')"

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| extra_port | 45999 |

| port | 45989 |

+---------------+-------+

Note that extra_port has been removed in Percona Server 8.0.14 and newer since MySQL Community has implemented admin_port which duplicates this functionality. So make sure to edit your my.cnf when upgrading to Percona Server 8.0.14 or newer if you already have extra_port defined there!

Setting up in MySQL Community

As mentioned, this requires MySQL 8.0.14 where WorkLog 12138 was implemented.

To enable the Admin Interface you have to define the admin_addres, which must be a single and unique (no wildcards allowed) IPv4, IPv6, IPv4-mapped, or hostname on which the admin interface will listen. If this variable is not defined, then the interface is not enabled at all.

You can also define a port, but it’s not mandatory and it defaults to 33062. So if that port is free then you don’t need to configure it. When defined, both variables should be placed under the [mysqld] section of your my.cnf.

Finally, you can also set create_admin_listener_thread (disabled by default) which will create a separate thread for incoming connection handling, which can be helpful in some situations.

Another difference is that Oracle’s documentation claims that:

There is no limit on the number of administrative connections.

(This is in contrast with our default of 1). I am not sure what this means, but I would be careful making sure you don’t accidentally establish 1,000,000 connections as they might not be limited but would still consume resources!

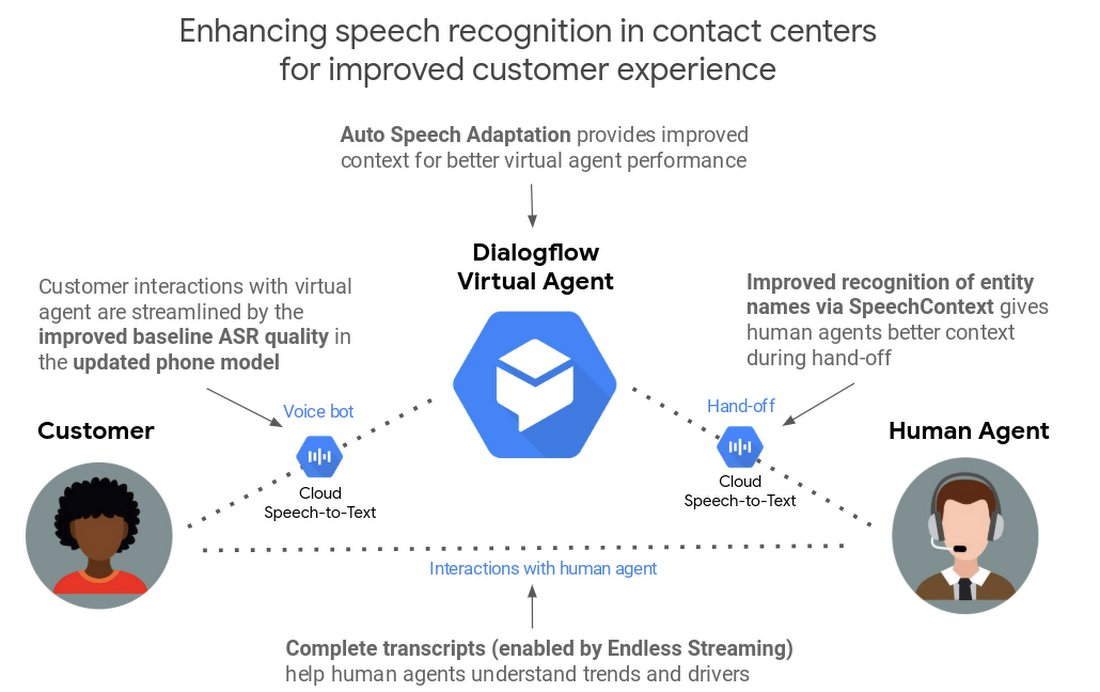

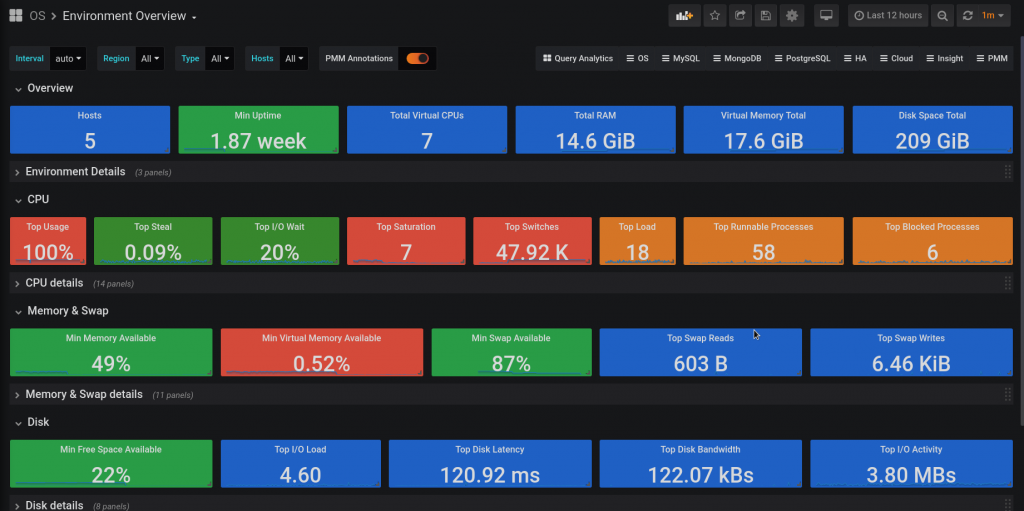

Using it for monitoring and health-checks

A very useful thing is that not only humans can use the extra interface/port during emergency cases where max_connections has been reached; it can also be used by your monitoring system and your proxy/load balancer/service discovery health-check probes.

Monitoring scripts can still pull data for your graphs to later understand why the connection pile up happened. And your health-check scripts could report the degraded state of the server, possibly with a particular code indicating connections are saturated but the server is responsive (meaning it could clear on its own, so it might be worth allowing a longer timeout to failover).

As a warning: make sure to establish only one single connection at a time for monitoring/health probes, to avoid filling up the extra_max_connections in Percona Server or to avoid creating one million threads in MySQL. In other words, your scripts should not connect again if the previous query/connection to the database is still ongoing.

And here is the same demo as before with MySQL:

~ grep admin_ my.sandbox.cnf

admin_address = 127.0.0.1

admin_port = 34888

# regular port

~ mysql --host=127.0.0.1 --port=35849 --user=msandbox --password -e "SHOW GLOBAL VARIABLES WHERE Variable_name IN ('port', 'admin_address', 'admin_port');"

Enter password:

ERROR 1040 (HY000): Too many connections

# admin interface and port

~ mysql --host=127.0.0.1 --port=34888 --user=msandbox --password -e "SHOW GLOBAL VARIABLES WHERE Variable_name IN ('port', 'admin_address', 'admin_port');"a

Enter password:

+---------------+-----------+

| Variable_name | Value |

+---------------+-----------+

| admin_address | 127.0.0.1 |

| admin_port | 34888 |

| port | 35849 |

+---------------+-----------+

Note that for Percona Server 8.0.14 and newer, the process will be the same as for MySQL Community.

Help! I need to login but I don’t have an extra port!

If this is the reason you are reading this post, then you can either follow the crazy GDB hack (no offense meant, Aurimas! Just seems risky :-D) or terminate the instance. The good part is that (most times) you can terminate the instance in a clean fashion by using SIGTERM (-15) instead of SIGKILL (-9). This will tell the server it should perform a clean shutdown, which will give threads a chance to exit gracefully. To do so simply run these:

1) Get PID

marcos.albe in ~/ pgrep -x mysqld; 650

2) And then send SIGTERM to that PID:

marcos.albe in ~/ kill -15 650;

3) You can now tail the error log to watch the shutdown happening; You should see a sequence like

2019-07-11T13:43:28.421244Z 0 [Note] Giving 0 client threads a chance to die gracefully 2019-07-11T13:43:28.521238Z 0 [Note] Shutting down slave threads 2019-07-11T13:43:28.521272Z 0 [Note] Forcefully disconnecting 0 remaining clients

That signals the beginning of the shutdown sequence. Then you should wait for a line like the one below to appear, to know the shutdown is complete:

2019-07-11T13:43:31.292836Z 0 [Note] /opt/percona_server/5.7.26/bin/mysqld: Shutdown complete